How AI’s Role Evolves Within HR

AI now acts more like an HR assistant than a replacement for your position. By 2025, managers have stopped seeing it as just a cost cutter. It has already outgrown simple chatbots and now helps you shape stronger workplace plans. The pace is fast. Most HR leaders I talk to say the shift feels as dramatic as moving from paper files to cloud apps.

AI matches candidates to roles based on skills you might miss on paper. Some systems even flag signs of stress by tracking how people communicate and work. Ask your vendor whether they offer health tracking, too. That feature alone can flag burnout weeks before it shows up in sick-day spikes.

You hear this a lot — a recruiter told me last week how much her work has changed. She used to spend mornings copying data between systems, and now she spends that time connecting one-on-one with candidates because the AI handles the paperwork. She told me it feels like she finally gets to do the job she was hired for in the first place.

You can’t automate all HR tasks. Humans aren’t going anywhere, and you still need a human touch for performance reviews and for settling conflicts. Those parts demand the emotional intelligence that machines don’t pick up on yet. Your team could learn from a large healthcare company that cut turnover by 23% last year by letting people lead the conversations while AI scanned workforce patterns.

Top organizations go past simply buying AI tools. It’s not about AI versus humans. They decide which tasks stay with people and map them out in a simple matrix: repetitive data entry goes to the machine, while delicate feedback sessions stay with the manager.

Employees also want to know when algorithms call the shots. Give clear explanations whenever AI influences hiring, promotions, or career growth so the process stays transparent. This openness doesn’t slow decisions — it builds trust and makes rollouts smoother when the next tool lands on everyone’s desktop.

Let people see exactly where AI steps in, and you’ll keep confidence high across the board.

Clear and Transparent Decision Practices

You want to know how the AI uses your info when it makes decisions at work. Say you apply for ten jobs, get rejected from each one, and weeks later learn the AI software watched your video interview and decided you weren’t a fit. That stings.

Ethical businesses say up front that the AI will review your application. They show you what the technology checks for and how much it counts in the final call.

You cut down on doubt when you share how the system runs. Employees question the processes they can’t see, and that reaction is normal in any workplace. Left alone, the doubt can dent the company culture or push good people away. A recent survey found that teams with open AI setups kept 23% more staff than the ones that kept the process hidden.

Real transparency starts when you tell employees which data points guide decisions about them. You also explain how much each factor weighs and when a human manager steps in to review the results. That clarity builds trust and shows you respect their time and skills.
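One way to picture that kind of disclosure is a short, generated notice. The sketch below is only an illustration: the factor names, the weights, and the review trigger are hypothetical placeholders, and a real tool would need to report whatever its model actually uses.

```python
def build_notice(factor_weights, human_review_trigger):
    """Turn raw factor weights into a plain-language notice.

    factor_weights: dict mapping a factor name to a non-negative weight
                    (hypothetical values, not taken from any real product).
    human_review_trigger: sentence describing when a manager steps in.
    """
    total = sum(factor_weights.values())
    if total <= 0:
        raise ValueError("At least one factor needs a positive weight")

    lines = ["This tool considers the following factors:"]
    # List the heaviest factor first so readers see what matters most.
    ranked = sorted(factor_weights.items(), key=lambda kv: kv[1], reverse=True)
    for name, weight in ranked:
        share = round(100 * weight / total)
        lines.append(f"- {name}: about {share}% of the score")
    lines.append(f"A manager reviews the result when: {human_review_trigger}")
    return "\n".join(lines)


# Hypothetical example values
notice = build_notice(
    {"skills match": 5, "relevant experience": 3, "assessment result": 2},
    "the score falls below the cutoff or the candidate asks for a review",
)
print(notice)
```

Keeping the notice to a few lines fits the point about sharing the basics without burying people in technical detail.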

You might worry about dumping too much technical detail — most people just want the basics. Will the AI flag you if you skip keywords? Does it track how you speak up in meetings? Ask for that info so you never run into surprises.

You gain even more when you commit to openness. People know the rules of the game and feel in control of their career path. They can focus on real work instead of trying to work around invisible systems. Openness also sparks new ideas as people start to trust the tools. Put openness first, and watch how it pays off.

You also help your HR team make decisions that are easier to explain and defend. They can spot problems sooner and build a reputation for fairness that pulls in top talent.

Algorithmic Bias Detection and Reduction

You can easily miss the bias in AI until you take a closer look at what it’s really doing. HR teams learn this lesson pretty fast when their shiny hiring tool starts favoring candidates from certain zip codes without any clear reason. The signs are there all along.

You can use AI to screen resumes, and it can quietly reject people from areas that have had higher turnover in the past. In many cases, that turnover traces back to a long commute or unreliable childcare, not to a candidate’s ability to do the job. After all, why should a bus route ever decide someone’s career? Hidden links like that create deeply unfair results.

You can spot this early by comparing how the system treats different groups with fairness metrics. Tools like Aequitas help you see if your model gives everyone a fair shot or if you need to tweak the settings before any real harm spreads.
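As a rough illustration of one such fairness metric, the sketch below compares selection rates between groups and flags any group whose rate falls below 80% of the best-treated group's rate, the commonly cited four-fifths rule of thumb. It is plain Python, not the Aequitas API, and the group labels and sample numbers are made up for the example.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of candidates advanced in each group.

    records: iterable of (group, advanced) pairs, where advanced is a bool.
    """
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, was_advanced in records:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody advanced anywhere, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(records, threshold=0.8):
    """List groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(selection_rates(records))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening outcomes for two zip-code groups
sample = (
    [("zip_A", True)] * 40 + [("zip_A", False)] * 60
    + [("zip_B", True)] * 20 + [("zip_B", False)] * 80
)
flagged = flag_groups(sample)
print(flagged)
```

A flag here is a prompt to investigate, not proof of bias. Small sample sizes can swing the ratio a lot, which is one reason to pair a check like this with the human review described in this section.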

Your team catches deeper problems when HR works closely with data scientists and legal experts to dig into the facts. No one wants to stand in court to explain why a supposedly neutral algorithm hired 90% men in a field that’s half women. That’s inviting a lawsuit.

Biased processes also drive away talent. A rejected candidate who feels mistreated won’t try again and will tell others about it, online and offline. No one wants to work at that place anyway. Before you know it, your employer brand takes a visible hit.

Keep people in the loop. Add human checks so someone can ask tough questions when AI makes odd recommendations. Ask why it gave a candidate a low rating and what drove that choice. Run regular audits to catch problems early. External reviewers find patterns that get lost when you’re too close to the data. Diverse teams bring fresh ideas and tap into a wider talent pool while competitors chase the same small group.

Human Oversight in Critical Decisions

You still need a human touch when the AI makes recommendations at work. Even if the algorithms crunch the data faster, they can’t replace your judgment. Keep an eye on both the numbers and the people. We see automation go off track all the time. The dashboard still looks impressive, but it’s blind to nuance.

An AI tool might flag an employee for termination because of low performance metrics. The manager who checks that flag then learns the person had a family crisis. That context never showed up in the data. What would the AI know about that situation? Human review means asking follow-up questions, weighing extra facts, and judging things in context. Without that pause, the wrong call becomes a permanent mark on someone’s record.

Regulators now expect employers to apply that level of oversight. Trust your instinct when a machine report seems off. Minor changes and swap requests can pass with a quick glance, yet hiring, firing, or promotion decisions should never fall entirely to algorithms. Humans catch what algorithms miss. Different choices need different levels of input, so map them out ahead of time.
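A mapping like that can be as simple as a lookup table. The sketch below is one possible shape, with hypothetical decision names and only two review tiers. Anything the table does not recognize defaults to the stricter tier.

```python
from enum import Enum


class Review(Enum):
    QUICK_GLANCE = "quick glance"
    FULL_HUMAN_REVIEW = "full human review"


# Hypothetical decision types; replace these with your own list.
LOW_STAKES = {"minor_change", "swap_request"}
HIGH_STAKES = {"hiring", "firing", "promotion"}


def required_review(decision_type):
    """Return the minimum review level for a decision type."""
    if decision_type in LOW_STAKES:
        return Review.QUICK_GLANCE
    # High-stakes and unrecognized decisions both get the stricter tier.
    return Review.FULL_HUMAN_REVIEW


def can_finalize(decision_type, completed_review):
    """Check whether the review a person actually did is enough.

    completed_review: a Review value, or None if no person looked at it.
    """
    if completed_review is None:
        return False
    if required_review(decision_type) is Review.FULL_HUMAN_REVIEW:
        return completed_review is Review.FULL_HUMAN_REVIEW
    return True


print(can_finalize("swap_request", Review.QUICK_GLANCE))  # True
print(can_finalize("firing", Review.QUICK_GLANCE))        # False
```

Defaulting unknown decision types to full review is a deliberate choice in this sketch: a new kind of AI recommendation should not slip through on a quick glance just because nobody classified it yet.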

You get better results when managers can question and override AI suggestions instead of going by the scores alone. Give your team clear rules for when to step in — that strategy pays off in fairness and accuracy. Try adding extra checks for the big moves, then ask yourself, “How did we click through without thinking last time?” When you’re bombarded with AI alerts, you risk approving everything without review, which defeats the point of human oversight.

Algorithms can still make harmful mistakes because they inherit bias and miss context you take for granted. Choose which decisions demand your judgment and set rules that fit your workflow — this helps the tech work for people, not the other way around. The machines still need our help. Without that partnership, small errors snowball into costly legal and morale problems. Take the time to align the code with your ethics before you click approve.

The 2025 Regulatory Environment for AI

Workplace AI laws are changing faster than most HR teams can keep up with, and that creates real stress. Many leaders feel squeezed between the push to innovate and the need to follow the law.

The EU AI Act now takes the lead worldwide, with enforcement that starts phasing in during 2025. That gives you clear deadlines to meet, so map out your steps well in advance and bring your legal and tech teams in early. Teams still exploring pilot projects already feel the clock ticking.

Compliance gets very tough when states write different rules. Federal executive orders in the US usually lean toward innovation and place fewer limits on AI. At the same time, places like Colorado have their own risk assessment and documentation requirements. Multi-state employers end up steering through a patchwork of standards. Each jurisdiction seems eager to stamp its own priorities on the technology.

Your work will hinge on good documentation. It takes time but prevents problems. Almost half of managers expect governments to back new ideas while still handing out penalties for misuse. That balance can be hard to keep, so treat your records as your most reliable friend. Review your filing process and make it easy for everyone to find what they need. Well-ordered logs also help your data scientists trace issues when an algorithm drifts.

Don’t assume that your AI vendors cover all compliance for you. They might not follow every rule you have to meet. A quick vendor pitch shouldn’t replace your own check. Build your own audit schedule and set up verification steps instead of accepting vendor assurances at face value. Run a tabletop exercise now and then to prove your evidence holds up.

You need to notify all your people when the AI makes decisions about them. Most of the new laws require clear notices so employees know how a tool can affect their work. Keep the message short and leave room for employee questions. That transparency builds trust and cuts down on hallway rumors.

The alternative is bigger fines and legal disputes with employees, and it comes with much steeper costs in money and reputation. You’ll save time and stress later if you set up clear processes ahead of time.

You don’t have to wait for every rule to be crystal clear before you move ahead — build a flexible compliance system now, add review checkpoints every few months, and adjust fast as the laws evolve.

Empower Your Team with AI Support

You might feel excited and a bit overwhelmed by how AI can change your tasks. When you face those changes, you can build a work environment where people feel heard, know that their ideas matter, and feel safe. You can shorten the learning curve, too. You’ll find that teams trust a system more when they see how it works and have a say in its use. Talk through each step and listen to the problems people raise.

That shared control turns AI from a strict set of rules into a team member you can all use. When you make the data handling clear, check for bias, and bring your team in at key steps, you balance speed with fairness. Good oversight doesn’t slow you down. It gives everyone the confidence to move ahead without worrying about hidden problems. Small steps make a difference over time. Does that mean more meetings? No, a few quick check-ins work. You can also keep people informed to protect those you serve and help your team learn and grow.

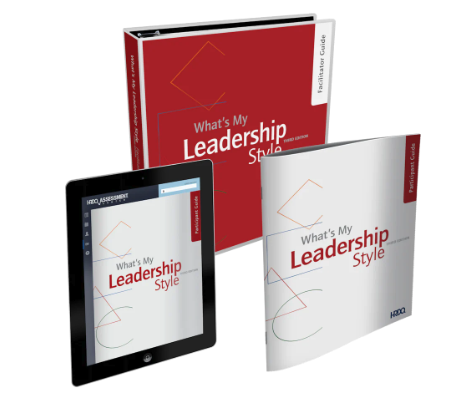

Next, you can join HRDQ-U, our learning community built for pros who want to sharpen their skills with easy-access webinars, podcasts, and blogs. Get started with our on-demand library and keep up with new ideas in HR and leadership. Check out our webinar, Use AI in the Workplace Responsibly with W.I.S.E. A.T. A.I. Megan guides you through tips that bring wisdom, fairness, safety, and openness to your AI projects and shows you how to build helpful prompts even if these tools are new to you.

Finish by exploring What’s My Leadership Style from the HRDQstore — it helps you find your own way of leading with confidence and a deeper, clearer sense of who you are every single day at work.